DRAFT: From PyParallel to Python Free-Threading

Optimally Exploiting Multiple Cores with Python

This article takes a look at the new no-GIL, free-threading functionality introduced in Python 3.13, as well as how it compares and performs against PyParallel’s attempt at exploiting multiple CPU cores over ten years ago. Using a demo project named Parallelopedia, we demonstrate the benefits afforded by the new functionality, whereby large data structures (tens to hundreds of gigabytes) are loaded in main memory and then accessed in parallel from Python, a feat not previously possible with the contemporary multiprocessing approach. Additionally, we investigate multi-threaded, parallel inference (generation) of transformer-based Deep Neural Networks via PyTorch, and explore the limits of what can and can’t currently be done, as well as a roadmap for future PyTorch support.

This article is sponsored by Meta in collaboration with Quansight and OpenTeams.

Introduction

Since its inception, Python has had a Global Interpreter Lock, or GIL, that prevents more than one thread from running Python code at the same time.

Python 3.13, released in October 2024, is the first version of Python to introduce support for a “no-GIL” free-threaded mode, per PEP-703 Making the Global Interpreter Lock Optional in CPython, unlocking the ability for multiple Python threads to run simultaneously.
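As a minimal sketch of how to tell which mode an interpreter is running in: `sysconfig.get_config_var("Py_GIL_DISABLED")` reports whether the build is free-threaded, and `sys._is_gil_enabled()` (added in 3.13) reports whether the GIL is active at runtime. The helper name `free_threading_status` is made up for illustration, and the fallback for older interpreters is an assumption that they always have the GIL enabled.

```python
import sys
import sysconfig


def free_threading_status():
    """Return (is_free_threaded_build, gil_enabled) for this interpreter."""
    # Py_GIL_DISABLED is 1 on free-threaded builds; on other builds it is
    # 0 or None.
    is_free_threaded_build = bool(sysconfig.get_config_var("Py_GIL_DISABLED"))
    # sys._is_gil_enabled() only exists on Python 3.13+. Assumption: any
    # interpreter without it has the GIL enabled.
    gil_check = getattr(sys, "_is_gil_enabled", None)
    gil_enabled = gil_check() if gil_check is not None else True
    return is_free_threaded_build, gil_enabled


if __name__ == "__main__":
    build, gil = free_threading_status()
    print(f"free-threaded build: {build}, GIL enabled: {gil}")
```

Note that a free-threaded build can still report the GIL as enabled, for example when an extension module that has not declared free-threading support is imported.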

This allows, for the first time since the language’s inception in December 1989, a single Python process to saturate all CPU cores in parallel with pure Python code (i.e. not farming out to extension modules written in C, C++, or, more recently, Rust).
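To make that concrete, here is a small sketch of CPU-bound, pure-Python work spread across threads. The function names `count_primes` and `parallel_count_primes` are illustrative only and do not come from Parallelopedia; the chunking strategy (one contiguous range per worker) is an assumption chosen for brevity rather than for optimal load balancing.

```python
import os
from concurrent.futures import ThreadPoolExecutor


def count_primes(lo, hi):
    """CPU-bound, pure-Python work: count the primes in [lo, hi)."""
    count = 0
    for n in range(max(lo, 2), hi):
        is_prime = True
        d = 2
        # Trial division up to the square root of n.
        while d * d <= n:
            if n % d == 0:
                is_prime = False
                break
            d += 1
        count += is_prime
    return count


def parallel_count_primes(limit, workers=None):
    """Split [0, limit) into one chunk per worker thread and sum the counts."""
    workers = workers or os.cpu_count() or 1
    # Ceiling division, with a floor of 1 so range() never gets a zero step.
    step = max(1, -(-limit // workers))
    chunks = [(lo, min(lo + step, limit)) for lo in range(0, limit, step)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(lambda c: count_primes(*c), chunks))


if __name__ == "__main__":
    print(parallel_count_primes(200_000))
```

The same code runs correctly on a regular GIL-enabled build; the difference is that there the threads take turns executing bytecode, whereas on a free-threaded build they can occupy separate cores at the same time.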

I can’t overstate how exciting this is! For decades, countless attempts have been made to solve this problem—including my own efforts with PyParallel—but no-one had prevailed in getting a solution mainlined into CPython. Until now!

I will admit I am biased: I am a Python committer (though not directly involved in this work), I adore the language, and have spent years obsessing over the dream of a parallel Python. It’s a topic I’m incredibly passionate about, and this article will unabashedly proselytize the extraordinary engineering that finally made a free-threaded Python a reality.

Thankfully, it’s not just blind, uninformed enthusiasm. Over the past few months I’ve had the opportunity to extensively test-drive free-threaded Python to prepare for this article. The verdict? I think it’s phenomenal, both in its implementation and in its performance on real-world problems. Free-threaded Python removes a significant limitation, making it possible to tackle a wide range of problems that were previously out of reach. I genuinely believe this is the best thing to ever happen to the Python language over the last three decades, and it will solidify its position as one of the most dominant programming languages for the next three decades. After all, we’re not getting fewer cores.

And this is essentially just the first pass! Certain trade-offs had to be made to deliver the core functionality within a reasonable time frame, such as disabling the non-thread-safe functionality introduced by PEP-659 Specializing Adaptive Interpreter, which is the feature responsible for delivering large speedups in the last few releases. However, this is on track to be re-enabled in free-threaded mode for Python 3.14, allowing multi-threaded code to also benefit from the same speedups single-threaded code has enjoyed the past few years.

The goal of this article is to review the technical details of the implementation, with some comparisons to prior work such as PyParallel. I’ll then cover the types of problems that are now incredibly well-suited to be tackled with a free-threaded Python, using a demo project I’ve created called Parallelopedia to provide a basis for concrete examples of real-world problems.

Finally, I’ll demonstrate some examples of how PyTorch can be used today with free-threaded Python, with particular focus on parallel inference (generation) on a shared model. Load a model once in a single Python process, replicate it across GPUs of interest, and perform parallel inference from multiple threads without needing to mess around with multiprocessing.

I’ll also cover areas of PyTorch that don’t yet work with free-threading, and the plans for tackling that in the future.

The motivation behind the PyTorch focus is simple: Meta’s PyTorch folks, through their relationship with Quansight, paid for my time to work on this article. Meta was also heavily involved in supporting and funding the free-threaded Python efforts, again through their relationship with Quansight. So special thanks go out to Meta for all of their support with both free-threaded Python and PyTorch overall.

And I’d be remiss if I didn’t thank my friend, colleague, and twice-now boss Dr. Travis Oliphant, CEO of Quansight and OpenTeams, primary creator of NumPy, founding contributor of SciPy, and one of the founders of Continuum.io (now Anaconda), with whom I worked many years ago. After the abrupt layoffs at Voltron Data in late September 2024, I reached out to Travis for work and he snapped me up instantly, putting me to work on the PyTorch project with Andrew James for a few months before I started my new role as a Principal Engineer at NVIDIA on the Core CUDA Compute Libraries team in late January 2025.

Thank you Meta, Quansight, Travis, and James! And thanks to all the folks involved in delivering free-threaded Python, including the Python Steering Council for taking a calculated risk and accepting PEP-703 Making the Global Interpreter Lock Optional in CPython in the first place.

Background

The Related Work section of PEP-703 does a fantastic job of capturing all of the past attempts at improving Python’s ability at simultaneously leveraging multiple CPU cores.

I believe the first attempt was in 1996, when Greg Stein…

…

David Beazley’s An Inside Look at the GIL Removal Path of Lore.

Larry Hastings’s Gilectomy.

The hilarious Reddit post by user /u/corysama in the thread Describe what developing for each console you’ve developed for is like. With regard to PS2 development, the following comment always stood out to me:

There are so many amazing things you can do, but everything requires backflips through invisible blades of segfault.

That absolutely encompasses the first few months of developing PyParallel. And then all subsequent months. Larry Hastings has a similar sentiment in the Gilectomy README, with the first sentence proudly stating:

Welcome to the exciting world of segfaults and massive slowdowns!

…

Way back in October 2012, there was a discussion on the python-ideas@ mailing list titled asyncore: included batteries don’t fit. The general discussion centered around providing better asynchronous I/O primitives in Python 3.4, and eventually led to the async and await keywords being introduced in Python 3.5, released around three years later in September 2015.

That thread was also the genesis for PyParallel, a fork of Python 3.3.5 that I created to implement a proof-of-concept version of the Python interpreter that tackled not only asynchronous I/O but also multi-core parallelism. Specifically, my hunch was that parallelism within the Python interpreter could be achieved when framed through the lens of asynchronous I/O primitives, such as TCP/IP socket servers.

There’s a comprehensive backstory that I’ve placed toward the end such that I don’t detract too much from the main purpose of this article, which is to focus on the exciting new primitives available to use via Python 3.13’s free-threading facilities.

Appendix

PyParallel Backstory

I’m not sure how interesting this section will be to readers, which is why I’ve stashed it at the end of the article. The older I get, especially now that I have a daughter, the more cathartic it is to reminisce about the early parts of my career nearly two decades ago.

This section captures the backstory behind PyParallel. PyParallel was born out of a) my interest in asynchronous I/O and multithreaded programming, and b) my experience with Snakebite, an “open source network” I built with the goal of providing Python committers access to all the different types of platforms Python ran on, especially all the UNIX derivatives from the late 90s to 00s, which I seem to be genetically predisposed to overly romanticize.

And I came up with the idea for Snakebite as a Python committer, where I found myself often frustrated at trying to debug buildbot breaks on platforms to which I had no access. And becoming a Python committer was an artifact of my involvement with the Python language, particularly with regard to providing buildbots and help with Windows 64-bit Python builds in the early days when AMD64 was still a novelty.

And I was led to Python by a random article by Eric S. Raymond, coupled with growing resentment toward Perl, which I found myself having to use when working with ClearQuest.

That’s the reverse chronological history in a nutshell. The next few sections go into more detail chronologically.

Becoming a Python Committer

From around 2000 to 2004, I was writing mostly C, C++, and Perl. I had founded a company with a business partner, Jamie Echlin, called OnResolve, and provided consultancy for an IBM (formerly Rational) change management product called ClearQuest, as well as a number of software products that extended the functionality of ClearQuest. ClearQuest could be customized in two languages: Visual Basic and Perl. The most prominent software product Jamie and I created was OnMessage, which was a combination of C++ and Perl, and provided enhanced e-mail notification facilities for ClearQuest. Jamie deserves much of the credit for what eventually became known as OnMessage; despite having a quirky Perl coding style I found difficult to work with, he absolutely laid the foundation for the product and the subsequent success we had with it.

ClearQuest, and its version-control counterpart, ClearCase, were ubiquitous in all of the industries that had the most money in the early to mid 2000s, particularly finance and oil. No-one liked using either of them: they were expensive and required huge teams to maintain and administer. With the advent of tools like Git and GitHub (albeit many years later), they are now relegated to the annals of history.

I was relatively productive with Perl, but I didn’t particularly like it. And I absolutely loathed having to deal with other people’s Perl code. Around late 2004 and 2005, Python arrived on my radar. I remember reading Eric S. Raymond’s Why Python? article, and it resonated strongly with me. Coming from Perl, Python simply felt magical. It was intuitive, easy to read, not cryptic, easy to work with other people’s code, and powerful, particularly as a glue language.

I adored it. I tackled all new projects with Python. I wrote a Python wrapper around ClearQuest named cqpython, which I used to carry out one of my most favorite projects to date: merging two ClearQuest databases into a single instance by auto-generating optimized SQL to conduct the merge at the database level. (This was harder than it sounds, as all ClearQuest databases would start off with the same unique ID offsets, so two otherwise unrelated databases would have almost identical keys for different entities, which needed to be resolved efficiently at merge time.)

By 2007-2008, I was bored of ClearQuest—it wasn’t a fun product to work with, nor did it look like it would be a good career move to continue specializing in it; the prices were extortionate, a lot of companies were trying to move off it, and better, cheaper alternatives were popping up.

However, it sure had been lucrative. I was able to enjoy extended periods of not needing to work, and I spent that time on things I was finding really fun, like contributing to Python. I set up a bunch of buildbots for Python, and was particularly interested in getting the 64-bit Windows Python builds “green” on the buildbots I had set up. I think I even paid for the Verisign code signing certificate used for the Python.org official Windows binaries back then (this was still the Python 2.x days).

This was back in the day when Martin von Löwis was still an active contributor to Python, and, if I recall correctly, the release manager for the Python 2.x series. Martin also maintained the Windows Python builds, a role that was eventually taken over by Steve Dower, who is still doing it to this day.

In 2008, I attended PyCon in Chicago. It was my first-ever PyCon. I had a fantastic time, and particularly loved the core Python sprints that happened after the conference. For those that are unaware, this is the one time every year where many of the active Python committers, including Guido, get together in a big conference room and just hack on Python collectively for a few days.

It was a bit of a surreal experience, sitting in a room hacking on Python alongside Guido, the very founder of Python himself, plus many other luminaries who actively contributed to the language over the years. I don’t know who took this photo, nor why it appears to have dimensions tailored towards ants, but you can see myself and Michael Foord at the foreground table, Guido in the bright green shirt at the top right table, sitting with Neal Norwitz and Barry Warsaw. And that looks like Brett Cannon and Martin von Löwis at the middle table.

I remember inundating Martin with patch bombs fixing various buildbot-related things at the start of that sprint, which Martin eventually dealt with by simply offering me commit privileges. I enthusiastically accepted! Honestly, that was a career highlight. This was in the days before everything was GitHub and Pull Requests: becoming a Python committer meant you would literally get svn+ssh access to svn.python.org, plus ssh access to the python.org boxes themselves if you wanted or needed it (e.g. for buildbot master configuration). This was certainly more compelling than the ClearQuest consultancy I’d done in the past, that’s for sure!

As much as I love Git and GitHub, getting your pull request accepted for an open source project just doesn’t have the same feel as getting offered commit privileges like back in the old days.

Snakebite: The Open Source Network

The python-ideas@ asyncore discussion

My Hunch

Offline Discussions with Guido

The Genesis of PyParallel

Prior Attempts at Removing the GIL

Removing the GIL without removing the GIL

Windows, Threading, and Asynchronous I/O

The backstory behind that hunch warrants its own article, and I don’t want to detract too much from the purpose of this article, which is to focus on the exciting new primitives available to use via Python 3.13’s free-threading facilities. Suffice to say that circa 2012, having done over a decade of multi-threaded and asynchronous I/O systems programming on UNIX and Windows by that stage, I had pretty strong ideas about how to best write high-performance parallel programs that optimally used multi-threading and asynchronous I/O primitives.

And to the detriment of wide-spread adoption of PyParallel, I was convinced that the sublime facilities provided by the Windows NT kernel for multi-threading and asynchronous I/O

without the restrictions imposed by the Python interpreter’s Global Interpreter Lock (GIL).

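    # Assumed import: `uint64` is taken to be NumPy's unsigned 64-bit type.
    from numpy import uint64

    def get_page_offsets_for_key3(search_string):
        # `titles`, `offsets`, `uint64_7`, and `uint64_11` are module-level
        # globals defined elsewhere.
        results = []
        items = titles.items(search_string)
        if not items:
            return results
        for (key, value) in items:
            v = value[0]
            # Use the magnitude of the stored value as the offset.
            o = uint64(v if v > 0 else v*-1)
            # Index of the first entry in `offsets` greater than `o`.
            ix = offsets.searchsorted(o, side='right')
            results.append((key, int(o-uint64_7), int(offsets[ix]-uint64_11)))
        return results

Colophon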

As much as I enjoy reading technical articles, I often find myself equally interested in the tools the author used in the production of the article. In this final section, I attempt to capture details regarding the tools used to author this article, in no particular order.

Quarto & Markdown

This article was written in Markdown using Quarto. The source code is here; there’s also an Edit this page link on the top-right of the page, under the contents (assuming you’re viewing the site on a wide-enough device).

For the syntax color scheme, I copied the dracula.theme from the Quarto repository into tpn.theme and then just hacked on it until I was mostly content with the results.

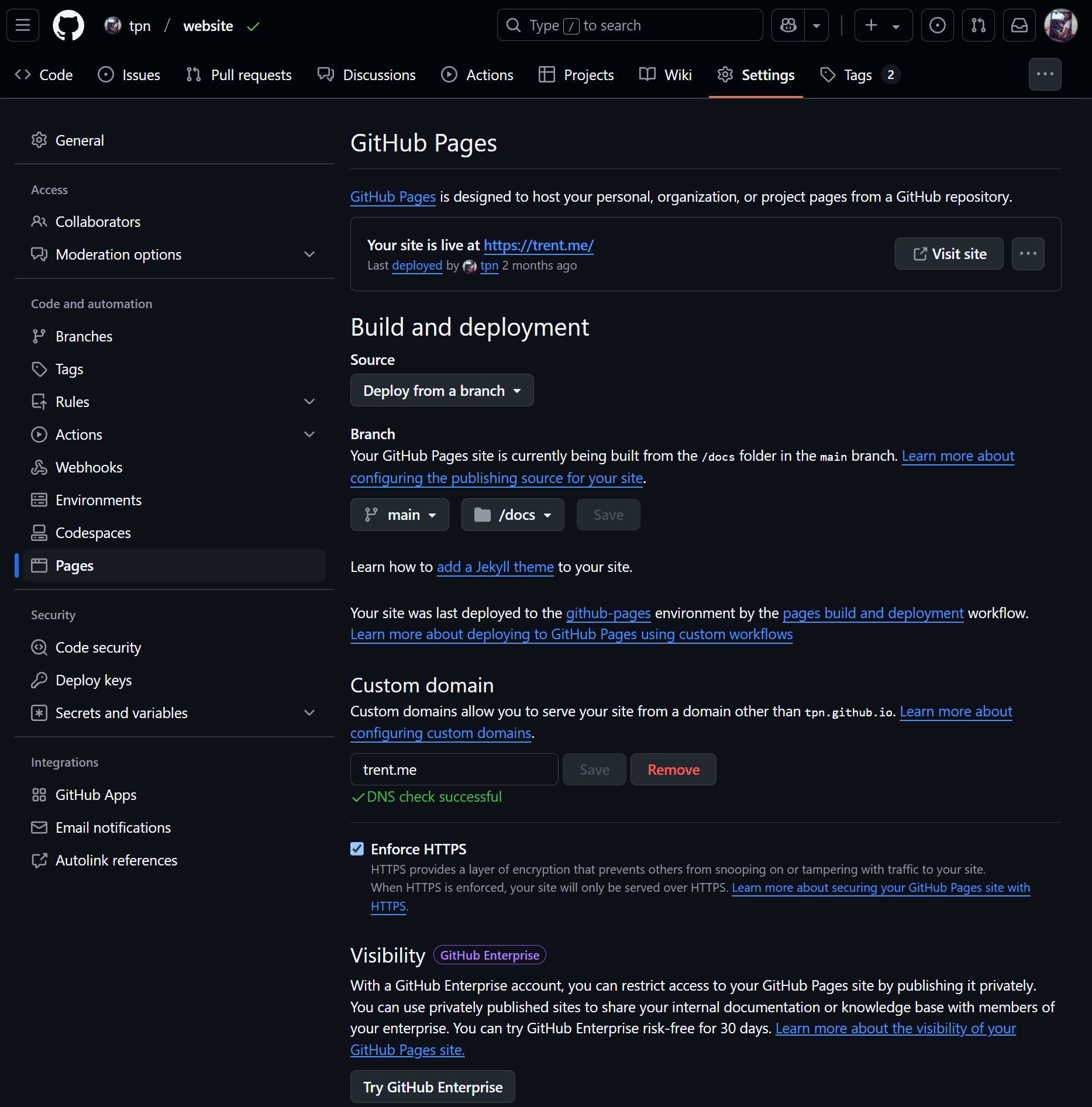

My whole trent.me website uses Quarto, and is hosted publicly on GitHub at my website repo. I also leverage GitHub’s hosting facilities via their “Pages” functionality, the configuration of which can be seen below.

“Development” on the website (i.e. writing articles like this one) is done on a variety of boxes, typically all Linux/WSL2. Quarto has a useful preview feature, so my local workflow is usually just something as simple as:

    % cd ~/src/website
    % quarto preview

I edited this index.qmd page predominantly in vim, although I’d sometimes use VS Code and the Quarto extension with integrated previewing. I generally turn Copilot off in vim when writing pure text; it’s too distracting, and it isn’t geared toward writing new prose versus coding tasks, which is obviously its bread and butter.

AI Tooling

In general, I for one welcome our new AI overlords. I have heavily integrated Copilot and ChatGPT into my workflow now as a developer for the past two years or so, and I thoroughly enjoy leveraging both tools.

LM Studio

LM Studio has also left a lasting impression and I’ve enjoyed experimenting with it a lot recently too. This has been aided by having a local box at home with 256 GB RAM, 40 cores, four Tesla V100 32GB GPUs, and a 14TB RAID0 stripe—plenty of room to download all of these huge models coming out.

A nice thing about LM Studio is that with a few clicks you can expose a local, OpenAI-compatible REST interface. LM Studio is powered by llama.cpp.
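As a rough sketch of what talking to that interface can look like from Python using only the standard library: the code below builds an OpenAI-style chat completion request and, optionally, sends it. The base URL (port 1234) is an assumption about LM Studio's default server settings, the model name is a placeholder to be replaced with whatever model is loaded locally, and the helper names `build_chat_request` and `chat` are made up for illustration.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is listening on its default port.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt, model, base_url=BASE_URL):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt, model, base_url=BASE_URL):
    """Send the request and return the text of the first choice."""
    request = build_chat_request(prompt, model, base_url)
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Placeholder model name; requires the local server to be running.
    print(chat("Say hello in five words.", "qwen2.5-coder-32b-instruct"))
```

Because the interface follows the OpenAI wire format, any client that lets you override the base URL should work the same way against the local server.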

Aider

I came across Aider recently. Aider is, to quote their website directly, “AI pair programming in your terminal.” It’s pretty neat, and I played around with having it drive a little bit of the Python development in the parallelopedia repo, and a lot of the development in the parallelopedia-ui repo, which is the React, Bootstrap, JavaScript/JSX web UI seen in this article.

I’m not a web developer, I don’t know how to write web apps, and I don’t care about the code quality of the web apps I do write (as I’m not writing anything other than demos or small tools), so whatever gets the job done is fine. However, I am, by trade, a software engineer, so a lot of the core skill set still carries over when working in foreign domains. Especially these days when you have AI an alt-tab away, or, in the case of Aider, available in a terminal to carry out your development requests.

The biggest issue I had with Aider was honestly just biting the bullet and trying it. Begin; the rest is easy, as they say. It’s definitely not perfect: I had to jump in and fix things a few times every session I had with it, but we’re talking maybe five interventions within a 3-4 hour coding session, give or take. It was particularly good at generating scaffolding that I could hack further with a single sentence. And it was really good at writing an entire Python unit test module for me based on a single sentence.

To get started with Aider:

    % python -m pip install aider-install
    % aider-install
    # Restart shell, or `export PATH=$HOME/.local/bin:$PATH`
    % which aider
    /home/trent/.local/bin/aider
    # I've got my OpenAI keys in a GPG-encrypted file ~/.zsh/openai_key.asc.
    % which init_openai_key
    init_openai_key () {
        if [ -f ~/.zsh/openai_key.asc ]
        then
            export OPENAI_API_KEY=$(
                gpg -d --batch --quiet ~/.zsh/openai_key.asc
            )
        fi
    }
    % init_openai_key
    % aider --model gpt-4o --vim

It was trivial to point it at a local model hosted by LM Studio, too:

    % aider --model lm_studio/qwen2.5-coder-32b-instruct@fp16 --vim

By default, Aider keeps a Markdown transcript of your sessions in a file named .aider.chat.history.md in the root of the repository you’re working with. I have included the transcript from my parallelopedia-ui repo below. I haven’t edited it, so it contains all my mistakes and errors and whatnot.
